Breaking Down A.I.

It is 1962, and we have a crisis on our hands.

No, not the Cuban Missile Crisis. The Food-a-Rack-a-Cycle has stopped working.

Poor Jane Jetson! She is exhausted from just thinking about it. How will she ever get all that push-button housework done all by herself?

Jane pleads for a maid. “Not gonna happen!” husband George blusters. “The budget is too tight already!”

But Jane spots a free trial on daytime TV, and signs up for an “economy model” anyway.

To the audience watching in their living rooms on black-and-white TVs, Rosie the Robot seemed like an old friend. A sort of futuristic housemaid taken from another TV series of the era, “Hazel”. The difference was that Rosie not only cleaned house and offered sage advice and made herself part of the family. She could also play a mean game of basketball, crank out homework, cook a complete dinner from scratch, and even read Boy Elroy some bedtime stories like “The Cow that Degravitated Over the Moon”.

But Rosie was more than just a smart bucket of bolts. She and most all the other contraptions conceived on “The Jetsons” embodied faith and hope for a wonderfully wacky future. A future of automation lovingly styled as servants for humanity. A future of robots that started working right out of the box, endowed with can-do spirit and old-fashioned Irish wit.

Not to mention knobby eyes, antenna ears, a stout figure and a maid’s apron.

Oh, and one more thing. A dollop of native intelligence.

Luke Dormehl “Thinking Machines” (2017)

Computer optimism began in earnest during the war. After some postwar fits and starts, the military’s ENIAC was trumpeted as the “Giant Brain” of the 1950s.

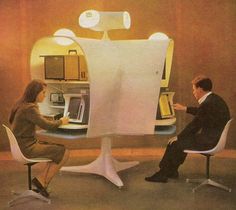

New York World’s Fair Exhibit 1964

By the early 1960s, the future was our oyster. The speed of innovation in engineering was dizzying. Satellites in orbit (Telstar) became a way to stream audio and video in real time across the world. As science and technology took hold of the public imagination, no event stimulated the public appetite more than the New York World’s Fair in 1964. There were personal flying machines and video phones, and computers that could play games of checkers and chess with the visitors. In a somewhat less hyped sideshow, IBM showcased a computer that could translate both English to Russian and Russian to English in a matter of seconds.

Even so, much of what was ballyhooed still required a mainframe. No matter. Soon scientists were asking: could robots and machines learn? Robots were pitched as not only highly specialized “workers” but capable of even more. Computers could steer rocket ships and control power generators and draw maps upon a huge screen. In short, computers could talk to the real world.

And where did all this buzz about computerization of our lives seem to lead? To a notion of “intelligence”—not human, but artificial—the seeming capability of machines doing things that humans themselves were already good at.

So here we are, sixty years hence. And why aren’t we there yet? What kinds of machines have we actually begotten anyway? Can we find out how they actually work?

Warning

The remainder of this post may be detrimental to your optimism.

Continue at your own risk

The Artificial Intelligentsia

It was Alan Turing who coined the phrase “Thinking Machine”—the same man who designed a way to break the encrypted Nazi codes. Turing later wrote of his idea of a Universal Machine that should be capable of performing many different tasks, and he published a paper on “Intelligent Machinery.” We can also thank him for the theoretical “Turing Test,” an analytical approach to determine whether or not a machine can be said to have intelligence (basically, if a human interrogator cannot determine whether he is talking to a machine or a human, the machine “wins”).

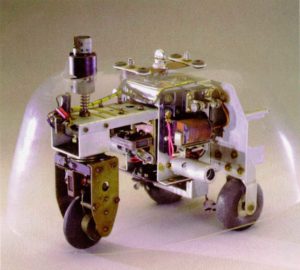

Machina Speculatrix (1951)

The optimism was not his alone. In 1951 William Grey Walter invented Machina Speculatrix, three-wheel robots that explored their environment with physical and visual sensors, and reacted to various obstacles and even steered themselves toward light sources when running low on battery—sort of an early version of the Roomba vacuum.

In the summer of 1956, the first official gathering of computer scientists and futurist intellectuals took place on the campus of Dartmouth College. The “Dartmouth Conference” brought together academics from many disciplines to announce their intentions for this newly developing discipline. In their declaration, they wrote:

The study [assumes] that every aspect of learning or any other feature of intelligence can be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve the kinds of problems now reserved for humans, and improve themselves. -p.13

The conference also included Marvin Minsky, who is now considered one of the fathers of “Artificial Intelligence” (he died in 2016). Minsky believed the problem of machine intelligence would be “substantially solved” within a generation.

When AI emerged, Minsky made it his lifelong passion, promoted the concepts in popular magazines as well as academic journals, and personally spearheaded the comeback of A.I. in 1994 with his famous article in Scientific American, “Will Robots Inherit the Earth?” which posits that we will eventually replace ourselves with machines.

There are many other men and women whose contributions to information theory and algorithmic problem solving are mentioned in this book. Their contributions can (and do) fill many other books. It is in the wake of their optimism that the science has soldiered on, sometimes making headlines, sometimes taking years to solve problems. But persist they did.

Intelligence: A New Vision

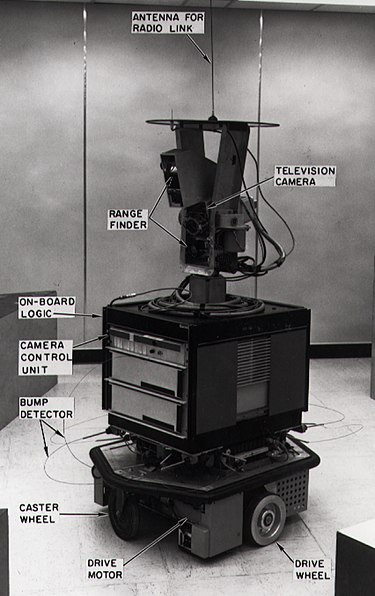

In 1970 Life magazine ran a feature on SHAKEY the robot, which the magazine called the world’s “first electronic person.” SHAKEY, the article continued, could “travel about the moon for months at a time without a single beep of direction from the earth.” Such cyperbole, as Dormehl puts it, was typical of the press at the time, and complete poppycock.

SHAKEY (1970)

Although most robots today are very real, they are not multipurpose machines. They are, simply put, combinations of heavy-duty arms and legs and eyes and ears and gears and switches controlled by semiconductors and gauges that can communicate with a program running on a CPU. They are built and dedicated to perform some very arduous and repetitive tasks quite well. Some might take a scan of your luggage as you walk through security. Others might have “brothers” and “sisters” helping them assemble a car. When well-oiled and electronically jazzed, they’re known to be more reliable than most of us, even if one of us is required to babysit. Best of all, they don’t need a minimum wage, they don’t call in sick, they don’t sue.

But unless they need to learn something, or learn from experience, or even teach you something—your typical robot is not, generally speaking, possessed of A.I.

Truly it is the words “intelligence” and “thinking” and “knowledge” that, when applied to machines, seem a bit of a stretch … like some anthropomorphic mistake. By nature we reason that machines aren’t “beings.” They don’t have the attributes of personality and mental state and consciousness that we normally associate with those other higher qualities.

But an intelligent thing? Might it be? Descartes may have been the first to work this out because he basically proposed that if you can think for yourself, then you know you have something going on inside. But can machines know they exist? Can they meditate or experience pain? Can they be happy or sad?

No, most of the time they just do things. But they do things in different ways. They may do only what they’re programmed to do. Or, better yet, as A.I. researchers would say, they may learn as they go.

How Is It Done?

So if a robot or a machine is said to be learning or thinking—analyzing, mulling it over, looking for clues, grasping at straws—how’s that happen exactly?

Originally A.I. came down to inputs, outputs, and the top-down program in between. In the period of the 1960s and 1970s, most A.I. research was comprised of such designs, which is to say applying logical decision-making to a complex environment of input data, filtered through conditions that were preloaded into the program.

It stands to reason then, that over time this created top-heavy implementations. Any effort to make a chess-playing machine into a multi-game machine would be ruinous due to the size of the program modules that must be simultaneously loaded in memory.
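To make the top-down idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration (the sensor names, the thresholds, the actions); it is not taken from any historical system. The point is only that every condition has to be written in by hand before the machine ever runs.

```python
# Hypothetical top-down rules for a toy robot. All names and thresholds are illustrative.
def decide(sensors):
    """Return an action by checking preloaded conditions in a fixed order."""
    if sensors["battery"] < 0.2:
        return "seek_charger"
    if sensors["obstacle_ahead"]:
        return "turn_left"
    if sensors["light_level"] > 0.8:
        return "stop"
    return "move_forward"


reading = {"battery": 0.9, "obstacle_ahead": True, "light_level": 0.1}
print(decide(reading))
```

Each new situation means another hand-written rule, which is one way to picture how such programs grew top-heavy.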

If this older process could be described as hand-coded, rule-driven programming, then a new approach was needed: letting the machine learn patterns from examples. Eventually the many-layered version of this new approach was dubbed “Deep Learning.”

If you are wondering why Facebook can pick you out from among a pixel pile of facial photos, look no further than Deep Learning. If you are wondering why your smartphone now understands and correctly recognizes what words you are speaking, loudly or softly, regardless of your accent, that’s because Google switched Android’s speech recognition over to a deep learning platform in 2012, immediately reducing its error rate by 25 percent.

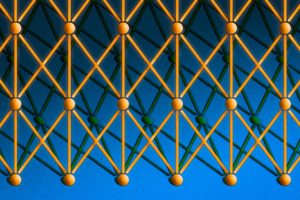

For the fun of it, let’s see what it would look like to the computer. We’ll start with the fundamental characteristics of an image and see if we get anywhere. And let’s think of our approach as a layered learning process. Each logical layer starts with “feature detectors” that take some part of the image and try to recognize it.

In trying to identify what’s in a photo, for example:

… the first layer [of machine analysis] may be used to analyze pixel brightness. The next [logical] layer then identifies any edges that exist in the image, based on lines of similar pixels. After this, another layer analyzes textures and shapes, and so on. The hope is that by the fourth or fifth layer, the deep learning net will have created complex feature detectors. At this stage, the deep learning net knows that four wheels, a windshield and an exhaust pipe are commonly found together, or that the same is true of a pair of eyes, a nose and a mouth. It simply doesn’t know what a car or a human face is. -p.51

So the machine does not really know the content of the picture. Nor does it need to, for as Geoff Hinton says: “The idea was that you train up these feature detectors, one layer at a time, with each layer trying to find structural patterns in the layers below.”
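The layering described above can be mimicked with a toy sketch. A loud caveat: in a real deep learning net the feature detectors are learned from data, whereas the three layers below are hand-written stand-ins, and the image, the threshold and the function names are all assumptions made for illustration.

```python
# Toy 5x5 grayscale "image": a bright vertical bar on a dark background.
IMAGE = [
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
    [0, 0, 9, 0, 0],
]


def brightness_layer(image, threshold=5):
    """Layer 1: mark each pixel as bright (1) or dark (0)."""
    return [[1 if px > threshold else 0 for px in row] for row in image]


def edge_layer(bright):
    """Layer 2: mark where brightness changes between horizontal neighbours."""
    return [[abs(row[i + 1] - row[i]) for i in range(len(row) - 1)] for row in bright]


def shape_layer(edges):
    """Layer 3: list the edge columns that run through every row (a vertical line)."""
    return [c for c in range(len(edges[0])) if all(row[c] for row in edges)]


edges = edge_layer(brightness_layer(IMAGE))
print(shape_layer(edges))  # -> [1, 2], the left and right sides of the bar
```

Each layer only looks for structure in the layer beneath it. Nothing here knows what a “bar” is, which is the quoted passage’s point about cars and faces.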

Of course at each layer the machine must have a reference by which sorting can be considered “intelligent”. Which leads us to the inevitable, head-scratching question: whether the analysis is top-down or bottom-up, does A.I. really signify knowledge—or is this simply a trick of recognition?

Elementary, My Dear

Make no mistake. Machines are learning. Right now, a little at a time. Robots can now cook a simple meal after having seen “how-to” videos on YouTube. In the near future on a trip to Moscow you will be able to speak English commands into your phone and play them back in Russian to your Uber-owski cab driver.

Applauding Watson on “Jeopardy!” (2011)

In February 2011, the computer/robot/A.I. “Watson” was finally ready to face off against two former champions on the game show “Jeopardy!”

It had taken IBM more than a few years to prime Watson’s deep learning: how to recognize parts of questions, how to interpret puns in the phrasing, and how to ferret out irrelevant hits. The impressive win was only topped by Ken Jennings’ rejoinder, a line from “The Simpsons” written on his answer board: “I for one welcome our new robot overlords.”

The spin just keeps on spinning. In five short years we now have a “Chef Watson”—an online assistant whose job it is to spit out computer-generated recipes, completely made up on the spot, and tailored to the likes and whims of your request. Simply install the app on your phone, give Chef Watson your preferences and a list of what’s left in your fridge. The virtual chef will deliver a quick and easy recipe in record time.

Creative Assistants

I, Robot (2004)

Speaking of Creative Assistants, one such “being” cast a spell upon Will Smith in the sci-fi thriller “I, Robot” (2004). During one of the more thoughtful scenes in that movie, the question arises: can a robot compose an original symphony from its knowledge of symphonic scores? Can it create a work of art upon a blank canvas?

Needless to say, both have been done. But to examine how it’s done, we must first imagine ourselves inside the creative machine whose “experience” with its chosen field is pretty much everything.

Backstory first. About ten years ago Google engineers knew that A.I. must first get to know the essence of an object. They would ask their A.I. to create an image of an ideal object by surveying that vast “knowledge” of things—in this case photos. The project was “Deep Dream.” They began by allowing the machine to experiment, as it were, picking out parts of the object from other parts. In other words, give your A.I. about 10 billion images to work with, and see what’s gonna happen. Well, here’s what happened when Deep Dream first attempted to create an image of a dumbbell.

Left unassisted, the neural network had become confused: it discovered unusual relations in Google’s 10 billion-plus images that make it difficult to work out where an object starts and stops. As a result, Google’s Platonic objects sprouted some unusual appendages, such as long fleshy arms that hung from Deep Dream’s idealized dumbbells like pink lengths of rubber tubing. [The engineers blogged:] “There are dumbbells in there all right, but it seems no dumbbell is complete without a muscular weightlifter in there to lift them.” p.155

Mistakes? No, Dave, I’m afraid machines don’t make mistakes. Instead they experience “training mishaps” and/or “fascinating feedback loops”. In a nutshell, it is the way machines must earn their keep by learning the heap: i.e. plowing through more and more thickets of neural nets. What we used to call trial-and-error? Yep, only on a massive scale.

And yet, like us, they have a ceiling. At their first try on other objects:

Clouds were associated with birds …. Trees became ornate buildings, leaves became insects, and empty oceans became cityscapes. p.155

For a mere machine without this higher level of referential integrity, there can be no intelligence. (There is no perceived difference between a dumbbell and a dumbbell with an arm.) So it is the engineers … not the machines … who really become smarter. Engineers must build in either (1) a layer of more specific objects (dumbbells without arms) or (2) the ability to distinguish the arms from the dumbbells. Engineers tinker with the layering; machines learn. Engineers design new algorithms to distinguish subtle differences; ultimately the robots reap the applied knowledge.

Actually there’s a name for this process. It’s called heuristics. In science it is fundamentally a problem-solving approach, but can be applied to any method of investigation. For this approach to work, the odd thing is that machines now are said to “have experience”. It seems a shame that a machine’s ability to pinpoint lookalikes in the interstices of its neural net is roughly the equivalent of “having experience,” but such morphing of metaphors is now the hallmark of A.I. hucksterism.

The Beatles Reunion

Star Wars (1977)

A.I. bragging kind of goes like this: take a very well-defined real world success, and from it invent a future development. Here is the story of computer science professor Lior Shamir whose machine sampled all the songs in the Beatles’ songbook: it analyzed pitch, tempo, and other patterns, and then correctly grouped the songs by album. Our scientist then conjectured:

“The computer will be able to compose songs based on the heuristics that you would find on that album,” he said. “That may mean using the same scales, time signatures, musical instruments, or whatever. Once you’re able to determine what makes a song novel, then generating that same novelty is just a matter of computing cycles. You might not wind up with a hit immediately, but have the computer generate it again and again and again, and you’ll eventually get there.” p. 162

That simple, huh? Professor Shamir goes on to say we should be enjoying a new Beatles album by the year 2026. Well, if CGI can create a young Audrey Hepburn for a new Dove Bar commercial, why can’t the Beatles be recreated in concert?

“People like to mythologize creativity like it’s just about a momentary flash of divine inspiration. It took Paul McCartney two years to finish the song “Yesterday” [or so claims Shamir]. There’s a process. Creativity is about heuristics. It’s about evaluating different paths and decisions until the right one is discovered. That’s where A.I. comes in.” p.165
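What Shamir describes, generating again and again and keeping whatever scores best, is often called generate-and-test. A minimal sketch follows. The scoring heuristic (prefer small steps between notes and an ending on the home note) is an assumption invented for this example, not anything Shamir or Dormehl specifies, and the function names are hypothetical.

```python
import random

C_MAJOR = [60, 62, 64, 65, 67, 69, 71, 72]  # MIDI note numbers, one octave of C major


def random_melody(rng, length=8):
    """Generate: pick notes at random from the scale."""
    return [rng.choice(C_MAJOR) for _ in range(length)]


def score(melody):
    """Test (hypothetical heuristic): reward stepwise motion and ending on C."""
    steps = sum(1 for a, b in zip(melody, melody[1:]) if 0 < abs(b - a) <= 2)
    return steps + (2 if melody[-1] in (60, 72) else 0)


def generate_and_test(tries=1000, seed=0):
    """Keep the best-scoring melody seen over many random attempts."""
    rng = random.Random(seed)
    best = random_melody(rng)
    for _ in range(tries - 1):
        candidate = random_melody(rng)
        if score(candidate) > score(best):
            best = candidate
    return best


best = generate_and_test()
print(best, score(best))
```

Notice where the taste lives: in `score`, which a human wrote. The computing cycles only search; someone still has to decide what counts as a good song.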

Our Robot Coworkers

Allow me to introduce EVE, a “robot scientist” who is happily and quietly working away on drug discoveries. EVE is the offspring of another robot named ADAM. The place she calls home? The University of Manchester’s Institute of Biotechnology. For her efforts she is well taken care of.

Ex Machina (2014)

EVE spends her days working out the testing protocols for new drugs. In her spare time, she comes up with hypotheses about what to test. She also helps out in the testing and reporting of results. EVE is quite the team player, a model multitasker. Not to mention: excluded from the pension plan.

Should EVE get an idea of her own, the world may be turned on its head. They keep thinking that she might. This could not only put some scientists out of work (shhhhh!), but perhaps more importantly raises the question: who gets the patent? The company, the research facility, or the robot?

Where Legal Meets the Illogical

A measure of innovation is its patent-ability. And the small print at the U.S. Patent and Trademark Office says that such a product must be deemed an “illogical step.”

If, as they say, A.I. is still in its infancy, at the Patent Office its childhood résumé is impressive. A.I. has begun moving into design fields in all areas of technical product development: electrical circuitry, optics, software repair, civil engineering and mechanics. Sci-Tech makes a convenient port of call for A.I., since the rules are already well-known, and many are universally accepted and commonly digitized.

But in attempting to put a claim to a patent, the model of A.I. building may also be something of an albatross. John Koza, one of the fathers of “evolutionary algorithms” (automated invention machines) has laid bare the legal conundrum that will be faced by future A.I. developers.

Dormehl writes:

[Koza’s] algorithms have created around seventy-six designs able to compete with humans in [various engineering fields]. In most cases, the designs had already been patented by humans. The computer had gotten there too late—although the fact that it came to the same creative solution with no prior knowledge of the work in question shows its value in the design process.

Koza explains:

“What the patent office means by an “illogical step” is that it is more than something you would inevitably think of, were you to plod along working on something based entirely on the past.”

-p.176

Dormehl glosses:

Why does that matter? Because, Koza says, if computers are only good at performing logical operations, how can they take an illogical step?

Such Progress

Wall-E (2008)

We have all seen children “learning” as they swipe their tablets and iPhones to select from a puny menu of preselected choices. We have witnessed the effects of the “knowledge” that comes from it (basically educational anemia). So we now have fewer qualified candidates for everything, and our tech-nation must import talent from India and China.

But robots, once envisioned as useful, highly specialized automated servants with accurate and repetitive behaviors like riveting and gluing and talking and walking and driving, now can do even neater tricks like cooking and delivering UPS packages—perhaps even, one day, real inventing.

On our way to this new brave world, it sometimes seems that the whole thing has become a kind of automated carnival show: computers mimicking human traits have become the shadow puppets of our CGI era. The lion’s share of A.I. hype today is how machines will first mimic and then improve upon our inadequate human abilities.

A.I. development is still tightly focused on knowable tasks, especially in the direction of (and funded by) military planning. We are finally going from the known-knowns to the known-unknowns. In the short term, think driverless Humvees, or bots that replace trolls and that can devise and dream up their own “fake news” in hundreds of different languages. In the long run, we’ll have algorithms that immediately identify a terrorist. Will any of these new technologies be used for the common good, such as the exploration of Mars?

Currently the degrees of progress on the bar of intelligence are measured in pixel puffs of speech and images. Whatever “deep learning” is, whatever “knowledge” computers have so far acquired for their own benefit, we still currently rely on gigantic back-end computer systems to sift through the evidence. The methods employed have been largely a process of logical elimination. But that too is changing.

The future is now under development: “mind clones” (3D avatars that will act just like us), “personality capture” (memory and person preservation for future generations), and “virtual immortality”—and yes, all have their sponsors around the world.

And there are several “brain transfer” projects, too. Witness the “MICrONS” project at Carnegie Mellon and other institutions, a multi-level research effort to “reverse engineer” the brain’s biological algorithms, primarily for the defense industry.

Ethics: A Final Note

There are simply too many issues to cover here. We could discuss Isaac Asimov’s three laws of robotics, or delve into the marriage of biology and cybernetics.

But if you’re into computers, you might also take a look at “Heart of the Machine: Our Future in a World of Artificial Intelligence” (2017)—a terrific read, penned by futurist Richard Yonck, but mainly for the nerdy-enough who care about automated emotional interfaces and their development. The flyleaf asks the question: What if your computer knew how you felt?

Yet ethics looms large here. And where in all this are the Luddites? Where are the prophets of doom? Whilst Elon Musk and Stephen Hawking have both spoken out, the rest of us remain curiously impressed by driverless cars and “smart” deadly drones and the portent of a RoboCop future.

In China and Japan and now the western world, millions of young people are falling victim to, and becoming captives of, this new addiction: virtual reality games. Based on my reading, it seems to me that “machines” may soon be applying in the court of public opinion to become a greater class of “being,” alongside but certainly distinct from the “beings” known throughout our literature and history as merely “human.”

In the movie “Lo and Behold: Reveries of the Connected World” (2016), Werner Herzog interviews a young researcher who is working on robots playing soccer. This engineer’s goal? To defeat FIFA’s champions by 2050. Now that is truly a noble cause.

Certainly computers don’t yet have consciousness or soul-worthy intentions. Nevertheless, they are being hyped as if they can walk and talk among us, sort of as if they were metallic clones of our biologic best selves. Supposedly consciousness is on yonder horizon. Soon, it is said, A.I.s will contribute inventions that we mere humans haven’t thought of. Soon they will create symphonies that will one day become worthy of some poor sod’s attention. Soon they will invent every new thing under the sun. Heaven help us.
